Project Summary

Overview

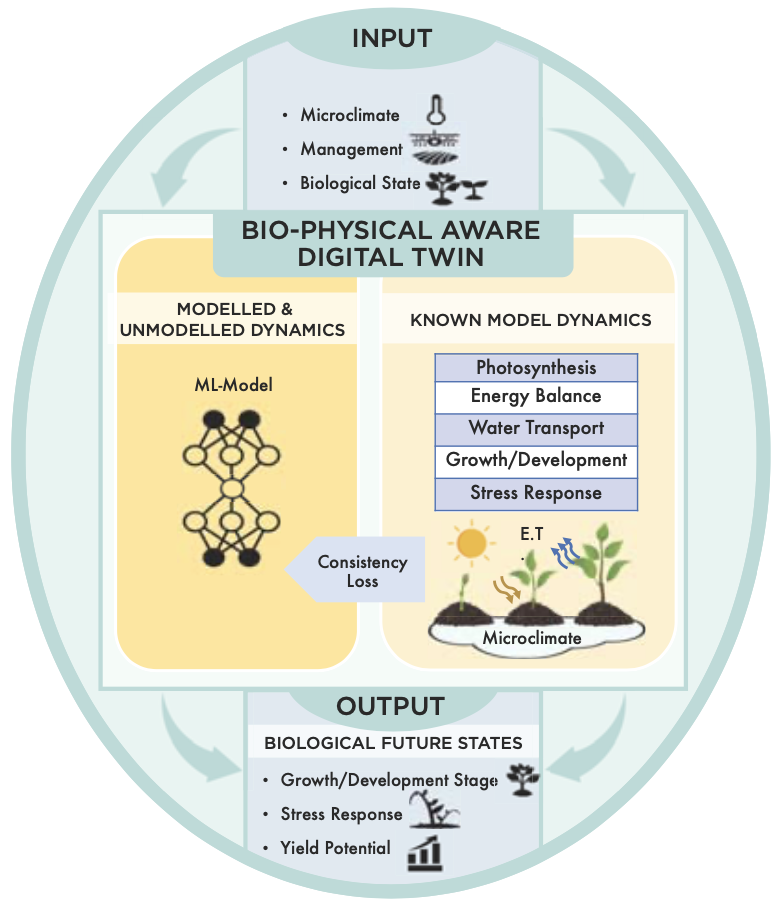

A predictive digital twin is a virtual simulation of connected biological entities that continuously assimilates sensor updates to mirror the life cycle of its corresponding biological system. AIIRA will deliver theory, algorithms, and tools (software and hardware) for the principled creation of AI-driven predictive digital twins at the individual plant scale, as well as the plot and field scale. These advances apply directly to climate resiliency, sustainability, and producer profitability, and are coupled with a social science thrust to maximize stakeholder understanding, trust, and acceptance. Our research is driven by three research thrusts and two cross-cutting research thrusts.

Research

Our research Thrusts bring together expertise from diverse disciplines to build and deploy digital twins. Each Thrust addresses its goals and objectives through the interdisciplinary projects highlighted in this section: building plant and field scale predictive models through foundational AI advances; deploying those models for breeding and crop production applications; and understanding and resolving social barriers to the adoption of AI technology in the agricultural ecosystem, along with AI innovations that support adoption.

AIIRA research has led us to develop five distinct moonshots that span the expertise available across our research thrusts.

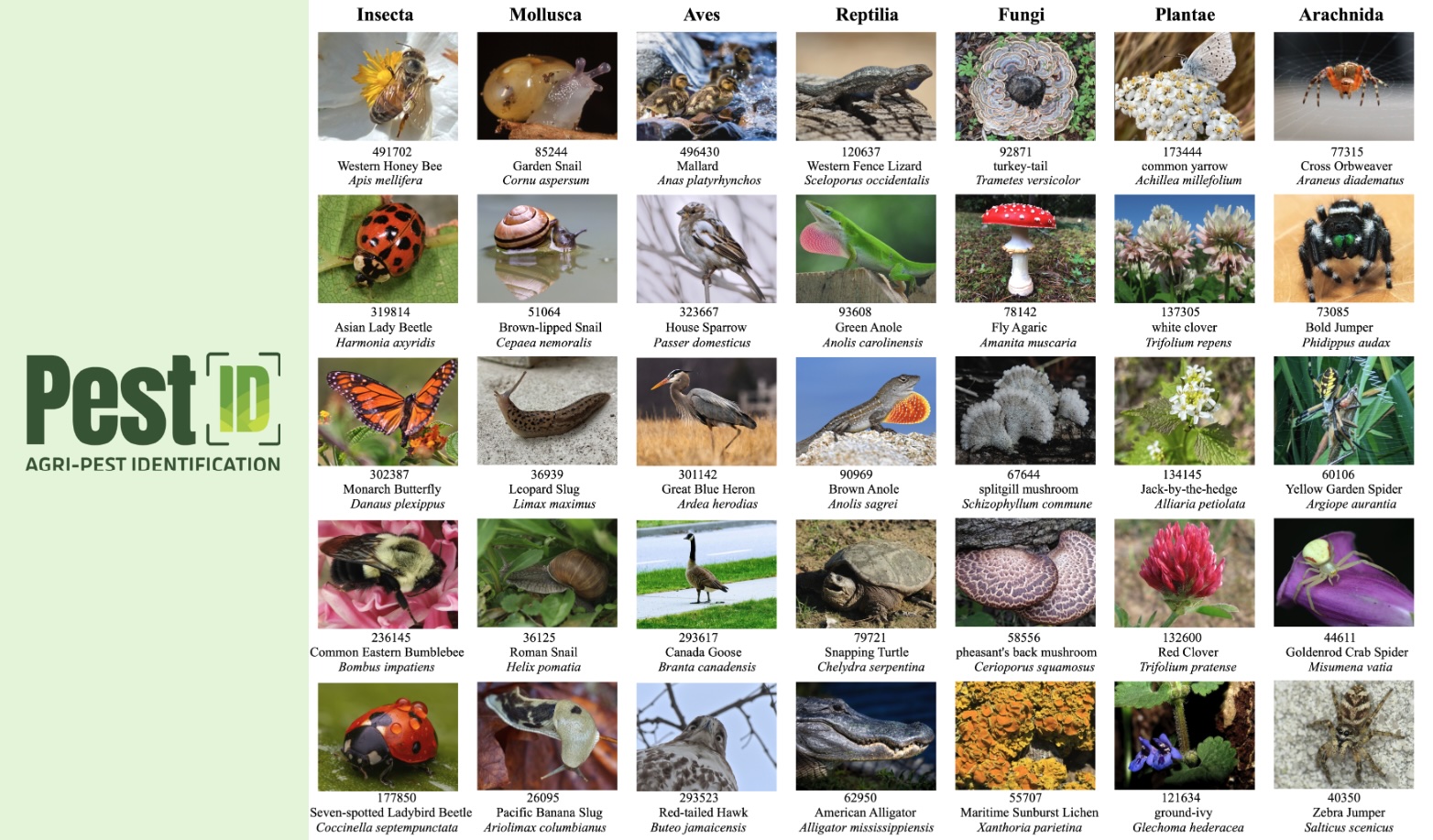

Moonshot 1: AI Agents for Pest Identification and Mitigation

This moonshot focuses on the development and application of AI-driven agents designed for the identification, classification, and mitigation of pests and diseases in agricultural settings. This past year, our research within this cluster integrated advanced machine learning techniques, sensor technologies, and domain-specific knowledge to address challenges in pest management.

AI-driven pest identification systems leverage sophisticated machine learning models, including deep learning and self-supervised learning, to accurately detect and classify pests and diseases that threaten crops. For instance, the papers on self-supervised learning for classifying agriculturally important insect pests explore how training models on unlabeled data can significantly improve their performance in real-world scenarios. This approach allows for the continuous improvement of pest identification systems without the need for extensive manually labeled datasets, making the models more adaptable to different environments and crop types.

Sensor-based pest detection is another critical component within this cluster. Advanced sensors, such as plant virus-specific nanocavities, enable the rapid and precise detection of pathogens at an early stage, which is crucial for effective pest management. These sensors can be integrated with AI agents to provide real-time monitoring and alert systems, allowing for timely interventions that can prevent widespread crop damage.

The research on zero-shot and few-shot learning introduces methods that allow AI systems to recognize new pest species or diseases with minimal training data. This is particularly valuable in dynamic agricultural environments where new threats can emerge suddenly. By leveraging weak language supervision and multimodal data, these systems can generalize well to unseen scenarios, making them robust and versatile tools in pest management.
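The zero-shot idea described above can be illustrated with a minimal sketch. It assumes that an image and a set of class names have already been mapped into a shared embedding space by some vision-language model. The vectors below are hand-made toy values, and the function names are hypothetical and are not taken from the cited papers.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def zero_shot_label(image_embedding, text_embeddings):
    """Return the class name whose text embedding best matches the image.

    No class-specific training happens here: a new pest can be supported
    by adding one more text embedding to the dictionary.
    """
    return max(
        text_embeddings,
        key=lambda label: cosine(image_embedding, text_embeddings[label]),
    )


# Toy embeddings (assumption): in practice these would come from a
# pretrained vision-language model, not be written by hand.
text_embeddings = {
    "soybean aphid": [0.9, 0.1, 0.0],
    "Japanese beetle": [0.1, 0.9, 0.1],
    "stink bug": [0.0, 0.2, 0.9],
}
image_embedding = [0.2, 0.8, 0.3]

predicted = zero_shot_label(image_embedding, text_embeddings)
print(predicted)
```

Because the classifier is only a similarity lookup, recognizing a newly emerging pest reduces to embedding its name or description, which is why this style of model can adapt with little or no additional labeled data.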

Collectively, this cluster of research contributes to the development of systems that identify and classify pests and suggest or implement mitigation strategies, thereby enhancing the resilience and sustainability of agricultural practices.

Representative papers:

- Feuer B, Joshi A, Cho M, Chiranjeevi S, Deng ZK, Balu A, Singh AK, Sarkar S, Merchant N, Singh A, Ganapathysubramanian B, Hegde C (2024) Zero-shot insect detection via weak language supervision. The Plant Phenome Journal, 7(1): e20107. doi:10.1002/ppj2.20107 (21 May 2024)

- Young TJ, Chiranjeevi S, Elango D, Sarkar S, Singh AK, Singh A, Ganapathysubramanian B, Jubery TZ (2024) Soybean canopy stress classification using 3D point cloud data. Agronomy, 14(6): 1181. doi:10.3390/agronomy14061181 (8 April 2024)

- Singh N, Khan RR, Xu W, Whitham SA, Dong L (2023) Plant virus sensor for the rapid detection of bean pod mottle virus using virus-specific nanocavities. ACS Sensors, 8(10): 3902-3913. doi:10.1021/acssensors.3c01478 (22 September 2023)

- Kar S, Nagasubramanian K, Elango D, Carroll ME, Abel CA, Nair A, Mueller DS, O'Neal ME, Singh AK, Sarkar S, Ganapathysubramanian B, Singh A (2023). Self-supervised learning improves classification of agriculturally important insect pests in plants. The Plant Phenome Journal, 6(1): e20079. doi:10.1002/ppj2.20079 (18 July 2023)

- Chiranjeevi S, Sadaati M, Deng ZK, Koushik J, Jubery TZ, Mueller D, O'Neal ME, Merchant N, Singh A, Singh AK, Sarkar S, Singh A, Ganapathysubramanian B (2023) Deep learning powered real-time identification of insects using citizen science data. arXiv preprint arXiv:2306.02507 (4 June 2023)

- Saadati M, Balu A, Chiranjeevi S, Jubery TZ, Singh AK, Sarkar S, Singh A, Ganapathysubramanian B (2023). Out-of-distribution detection algorithms for robust insect classification. arXiv preprint arXiv:2305.01823 (2 May 2023)

Moonshot 2: Multi-Modal Foundational Models

The focus of this moonshot is on collecting multimodal data and on creating and refining foundational AI models that integrate various data modalities, including visual, spectral, and textual data, to support a wide range of agricultural applications. This year, we explored the development of large-scale, multimodal datasets and the application of these datasets to train AI models capable of performing complex tasks in agriculture.

The development of multimodal foundational models is a transformative approach in AI for agriculture, addressing the need for comprehensive and versatile systems that can process and analyze diverse data types. These models are designed to handle data from multiple sources, such as hyperspectral imaging, 3D point clouds, and textual descriptions, and to integrate this information to make informed decisions. One significant contribution is the creation of large-scale datasets like the Arboretum, which provides a rich collection of biodiversity-related data across multiple modalities. Such datasets are crucial for training foundational models that can generalize across different agricultural contexts, enabling tasks such as species identification, plant stress detection, and biodiversity monitoring with high accuracy.

The integration of multimodal data into AI models allows for the extraction of richer, more nuanced information than single-modality models. For example, by combining hyperspectral leaf reflectance data with 3D structural data, models can better understand plant health and stress factors, leading to more precise and actionable insights. The research on class-specific data augmentation further enhances these models by generating synthetic data that improves the models' robustness to variations in the input data, such as changes in lighting or plant orientation. Moreover, the use of zero-shot and few-shot learning techniques within these models is particularly innovative, enabling the models to adapt to new tasks or datasets with minimal additional training. This is essential for agricultural applications where new data types or conditions frequently arise, such as novel plant diseases or changing environmental conditions.

Representative papers:

- Arshad MA, Jubery TZ, Roy T, Nassiri R, Singh AK, Singh A, Hegde C, Ganapathysubramanian B, Balu A, Krishnamurthy A, Sarkar S (2024) AgEval: A benchmark for zero-shot and few-shot plant stress phenotyping with multimodal LLMs. arXiv preprint arXiv:2407.19617 (29 July 2024)

- Yang CH, Feuer B, Jubery TZ, Deng ZK, Nakkab A, Hasan MZ, Chiranjeevi S, Marshall K, Baishnab N, Singh AK, Singh A, Sarkar S, Merchant N, Hegde C, Ganapathysubramanian B (2024) Arboretum: A large multimodal dataset enabling AI for biodiversity. arXiv preprint arXiv:2406.17720 (25 June 2024)

- Saleem N, Balu A, Jubery TZ, Singh A, Singh AK, Sarkar S, Ganapathysubramanian B (2024) Class-specific data augmentation for plant stress classification. arXiv preprint arXiv:2406.13081 (18 June 2024)

- Tross MC, Grzybowski MW, Jubery TZ, Grove RJ, Nishimwe AV, Torres-Rodriguez JV, Sun G, Ganapathysubramanian B, Ge Y, Schnable JC (2024) Data driven discovery and quantification of hyperspectral leaf reflectance phenotypes across a maize diversity panel. The Plant Phenome Journal, 7(1): e20106. doi:10.1002/ppj2.20106 (16 June 2024)

- Young TJ, Jubery TZ, Carley CN, Carroll M, Sarkar S, Singh AK, Singh A, Ganapathysubramanian B (2023) Canopy fingerprints for characterizing three-dimensional point cloud data of soybean canopies. Frontiers in Plant Science, 14: 1141153. doi:10.3389/fpls.2023.1141153 (28 March 2023)

Moonshot 3: Multi-Agent Adaptive Sensing

This cluster encompasses research on the deployment of multiple agents, such as robots and sensor networks, that collaborate to perform adaptive sensing tasks in agricultural environments.

Multi-agent adaptive sensing is a challenging approach in precision agriculture, in which multiple robotic or sensor agents are deployed to monitor and interact with the agricultural environment autonomously. These agents are equipped with AI algorithms that enable them to make real-time decisions about where, when, and how to collect data, thereby optimizing the sensing process to ensure comprehensive coverage and high-quality data collection.

One of the core components of this cluster is the development of algorithms for multi-robot adaptive sampling and informative path planning. These algorithms allow robots to coordinate their movements and data collection strategies based on real-time environmental data, enabling efficient monitoring of large agricultural areas. For example, in scenarios where environmental conditions such as soil moisture or crop health vary significantly across a field, these robots can adapt their sampling patterns to focus on areas of higher variability, ensuring that critical data is captured. We also explored the challenges of deploying sensors in cluttered or complex environments, such as dense crop fields or orchards, including autonomous crop monitoring systems that insert sensors into these environments without human intervention. These systems are designed to navigate around obstacles and position sensors optimally, ensuring that data is collected from all necessary locations.
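The adaptive sampling idea described above can be sketched as a simple greedy rule. It assumes a grid of field cells, each with an estimated variability score, and sends each robot to the unclaimed cell with the best ratio of variability to travel distance. The scoring rule, the data, and all names are illustrative assumptions, not the algorithms from the cited papers.

```python
def manhattan(a, b):
    """Grid distance between two (row, col) cells."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def assign_targets(uncertainty, robots):
    """Greedily assign each robot one sampling target.

    uncertainty: dict mapping (row, col) -> estimated variability score
    robots: dict mapping robot name -> current (row, col) position
    A cell claimed by one robot is removed so that robots do not
    duplicate each other's samples (a very simple form of coordination).
    """
    remaining = dict(uncertainty)
    targets = {}
    for name, position in robots.items():
        if not remaining:
            break
        best = max(
            remaining,
            key=lambda cell: remaining[cell] / (1 + manhattan(position, cell)),
        )
        targets[name] = best
        del remaining[best]
    return targets


# Toy field (assumption): higher numbers mean more variable soil moisture.
uncertainty = {(0, 0): 0.1, (0, 3): 0.9, (3, 0): 0.2, (3, 3): 0.8}
robots = {"robot_a": (0, 0), "robot_b": (3, 3)}

targets = assign_targets(uncertainty, robots)
print(targets)
```

In a fuller system the variability scores would be updated after every measurement, for example from a spatial model of the field, and the assignment would be recomputed as robots move.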

Another important aspect of this cluster is the integration of predictive models with adaptive sensing systems. By using reinforcement learning-based approaches, these systems can predict environmental dynamics and adjust their data collection strategies accordingly. This is particularly useful in situations where the environment is constantly changing, such as in response to weather patterns or crop growth stages.

Additionally, activities in this moonshot explore the concept of next-best-view planning, where sensors or robots are guided to positions that maximize the information gained from each observation. This approach is critical for applications such as fruit sizing or disease detection, where the ability to view an object from multiple angles can significantly improve measurement accuracy.
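A minimal sketch of next-best-view selection follows. It assumes that each candidate camera pose is known to observe a set of surface patches on the target, such as a fruitlet, and it uses the count of newly observed patches as a stand-in for information gain. The view names, patch identifiers, and functions are hypothetical and do not describe the planner used in the cited work.

```python
def next_best_view(candidate_views, already_seen):
    """Return the view that reveals the most patches not yet observed."""
    return max(
        candidate_views,
        key=lambda view: len(candidate_views[view] - already_seen),
    )


def plan_views(candidate_views, budget):
    """Greedily pick up to `budget` views, stopping when nothing new is gained."""
    seen = set()
    order = []
    for _ in range(budget):
        remaining = {v: p for v, p in candidate_views.items() if v not in order}
        if not remaining:
            break
        view = next_best_view(remaining, seen)
        if not remaining[view] - seen:
            break  # no remaining view adds information
        order.append(view)
        seen |= remaining[view]
    return order, seen


# Toy example (assumption): integers label surface patches on the object.
candidate_views = {
    "front": {1, 2, 3, 4},
    "left": {3, 4, 5},
    "top": {5, 6, 7},
    "right": {1, 2},
}

order, seen = plan_views(candidate_views, budget=3)
print(order, sorted(seen))
```

Here two views are enough to cover every patch, so the planner stops before exhausting its budget. Real planners typically replace the patch count with a measure such as expected reduction in uncertainty.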

Representative papers:

- Chen, L., et al. (2024) Distributed multi-robot source seeking in unknown environments with unknown number of sources. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). (Submitted)

- Kailas, S., et al. (2024) Integrating multi-robot adaptive sampling and informative path planning for spatiotemporal environment prediction. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). (Submitted)

- Deolasee, S., et al. (2024) DyPNIPP: Predicting environment dynamics for RL-based robust informative path planning. 8th Annual Conference on Robot Learning (CoRL) 2024. (Submitted)

- Kim CH, Lee M, Kroemer O, Kantor G (2024) Towards robotic tree manipulation: Leveraging graph representations. IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, pp. 11884-11890. doi:10.1109/ICRA57147.2024.10611327 (8 August 2024)

- Freeman H, Kantor G (2023) Autonomous apple fruitlet sizing with next best view planning. 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, pp. 15847-15853. doi:10.1109/ICRA57147.2024.10610226 (8 August 2024)

- Yin S, Dong L (2024) Plant tattoo sensor array for leaf relative water content, surface temperature, and bioelectric potential monitoring. Advanced Materials Technologies, 9(12): 2302073. doi:10.1002/admt.202302073 (18 April 2024)

- Lee M, Berger A, Guri D, Zhang K, Coffey L, Kantor G, Kroemer O (2024) Towards autonomous crop monitoring: Inserting sensors in cluttered environments. IEEE Robotics and Automation Letters, 9(6): 5150-5157. doi:10.1109/LRA.2024.3386463 (8 April 2024)

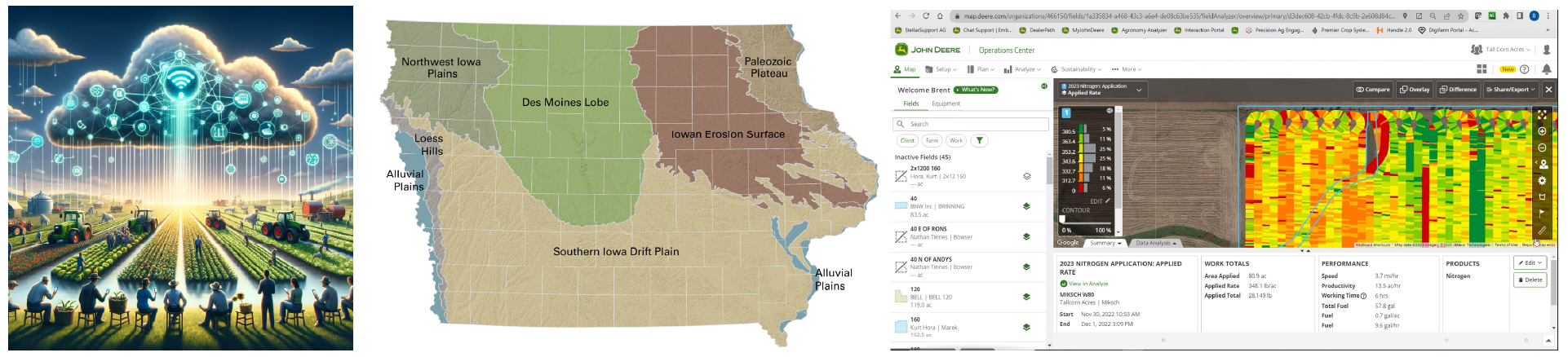

Moonshot 4: Data Co-operative

The Data Co-op cluster focuses on the creation, sharing, and governance of agricultural data through cooperative frameworks. The research in this cluster addresses issues related to data standardization, accessibility, data sovereignty, and collaborative research efforts, aiming to foster broader AI adoption and innovation in agriculture.

The concept of a Data Co-op in agriculture is centered around the idea of collaborative data sharing and governance, where stakeholders, including farmers, researchers, and institutions, contribute to and benefit from a shared pool of agricultural data. This moonshot emphasizes the importance of data standardization, open-access platforms, and the ethical use of data to drive innovation in AI-driven agricultural practices. One contribution this year was the development of frameworks that combine translational research with behavioral economics to understand and promote the adoption of AI technologies in agriculture. This research highlights the challenges and opportunities in creating data-driven solutions that are accessible and beneficial to a wide range of users, from smallholder farmers to large agricultural enterprises.

Data governance and sovereignty are also major themes within this cluster. Research on Indigenous data sovereignty, for example, explores how tribal agricultural data needs can be met while respecting the rights and traditions of Indigenous communities. This work underscores the importance of ethical considerations in data sharing and the need for governance models that empower all stakeholders, particularly marginalized groups.

The integration of AI with data-sharing platforms facilitates the annotation and standardization of diverse agricultural datasets. This not only improves the usability and interoperability of data but also supports the creation of more robust and generalizable AI models. Finally, we invested significant effort in creating common datasets, such as resequencing-based genetic marker datasets for global maize diversity, 3D point cloud datasets, and large multi-modal datasets. These datasets are critical, for example, for enabling large-scale genomic studies and breeding programs that can lead to the development of more resilient and productive crop varieties. By making such data widely available through cooperative frameworks, researchers and breeders can collaborate more effectively, accelerating the pace of agricultural innovation.

Representative papers:

- Segovia, M.S., et al. (n.d.). Combining translational research and behavioral economics to study AI adoption in agriculture. [Publisher Unknown]

- Shrestha, N., et al. (2024). Crop performance aerial and satellite data from multistate maize yield trials. bioRxiv. [DOI pending as of May 9, 2024]

- Jennings, L., et al. (2024) Intersections of indigenous data sovereignty and tribal agricultural data needs in the US. Tucson, AZ: Collaboratory for Indigenous Data Governance. doi:10.6084/m9.figshare.26156170

- Feuer B, Liu Y, Hegde C, Freire J (2024) Open-source column type annotation using large language models. Proceedings of the VLDB Endowment, 17(9): 2279-2292. doi:10.14778/3665844.3665857 (6 August 2024)

- Yanarella CF, Fattel L, Lawrence-Dill CJ (2024) Genome-wide association studies from spoken phenotypic descriptions: A proof of concept from maize field studies. G3: Genes|Genomes|Genetics, 14(9): jkae161. doi:10.1093/g3journal/jkae161 (5 August 2024)

- Tross MC, Grzybowski MW, Jubery TZ, Grove RJ, Nishimwe AV, Torres-Rodriguez JV, Sun G, Ganapathysubramanian B, Ge Y, Schnable JC (2024) Data driven discovery and quantification of hyperspectral leaf reflectance phenotypes across a maize diversity panel. The Plant Phenome Journal, 7(1): e20106. doi:10.1002/ppj2.20106 (16 June 2024)

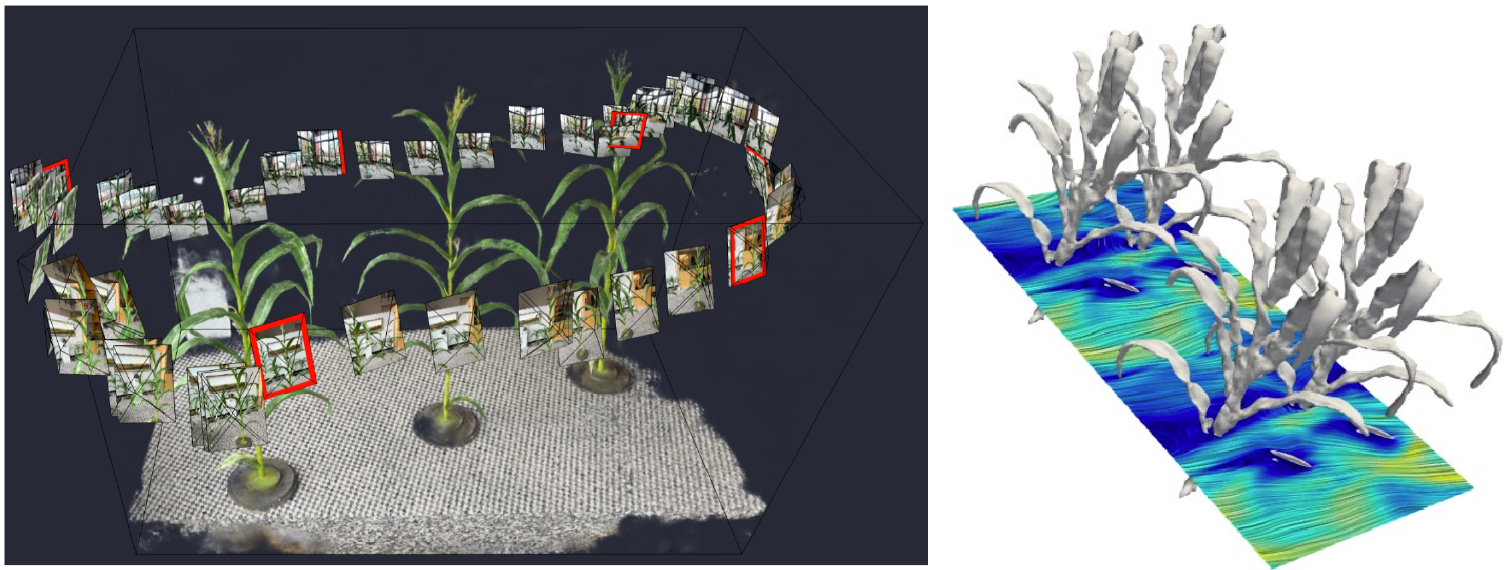

Moonshot 5: 3D DT Modeling and Design

We're using 3D modeling and virtual reality to create realistic digital versions of plants. These digital twins allow researchers and farmers to test how plants will grow under different conditions, helping them design better crops. By combining AI and physics-based simulations, we're pushing the boundaries of what's possible in crop breeding and farm management.

Representative papers:

- Arshad MA, Jubery TZ, Afful J, Jignasu A, Balu A, Ganapathysubramanian B, Sarkar S, Krishnamurthy A (2024) Evaluating neural radiance fields for 3D plant geometry reconstruction in field conditions. Plant Phenomics, 6: 0235. doi:10.34133/plantphenomics.0235 (9 September 2024)

Broadening Participation (BP)

AIIRA's diverse and inclusive group will continue to cultivate a culture of inclusive excellence across all aspects of the project to support our broadening participation goals. Our approach starts from recruitment practices through onboarding and training practices, and extends to our communication and teamwork. In conjunction with the AIIRA learning community, inclusive excellence will be ingrained in our mentoring and advising practices.

AIIRA is uniquely positioned to positively impact the Native American community, a traditionally underserved community. We also build on our strengths to increase the participation of women in the AI-Ag ecosystem. These serve as our signature activities. Additionally, AIIRA leverages and expands upon the sustained efforts of our members and partners to reach an array of underserved communities. For instance, the Data and Software Carpentries provide training in computing and data science and reach thousands of participants each year, offering a streamlined vehicle for AIIRA broadening participation activities.

Education and Workforce Development (EWD)

AIIRA's perspective on education and workforce development starts with a vision. We believe advancing AI in agriculture requires a skilled and diverse workforce capable of doing today's work as well as imagining the future and getting us there.

Bringing current scientists, engineers, farmers, and industries into a data-driven reality requires access to new ideas, skills, and tools. We envision our education and workforce development effort as a pathway that brings learners across all academic levels into the new discipline of Cyber Ag Systems.

The universities involved in AIIRA have been providing education across all these levels and disciplines for some time. We are uniquely positioned to define how to educate the Ag workforce of the future through signature educational activities that form an integrated pathway for Cyber Ag Systems.

Collaboration and Knowledge Transfer (CKT)

AIIRA will create a learning community to deeply integrate collaborative research across disciplines. This will serve as the socio-technical infrastructure that enables collaboration and knowledge sharing for the project. Our convergent team science approach will create a common ground and expectations among the team members. This is crucial to create an inclusive culture. Different team members will lead regular meetings to educate others about their field and to identify connections to other community members. The outputs of this learning community, such as short tutorials, syllabi, and other curricular material (e.g. case studies), will be easily incorporated in other classes, workshops, and development activities.